This post is authored by Kavita Chodavarapu, Head of Quality Engineering at Airtable, and Karan Gathani, Software Engineer in Test at Airtable.

The Quality Engineering group at Airtable aims to set a high bar for software testing at Airtable, and we frequently use Airtable to track the testing process.

A few months ago, however, we realized that our process involved many manual steps. Automating them dramatically streamlined task assignment and saved 70% of the Quality Engineering team’s bandwidth for every new software release.

Here’s how we did it:

The Quality Engineering group is made up of about 27 engineers working from various parts of the U.S. and India. We are a remote-first team that meets quarterly in Airtable’s Mountain View office for in-person collaboration and team bonding.

Our group uses Airtable for several internal workflows. One of them is managing our software development lifecycle (SDLC). Our engineering teams follow an agile development method: in two-week cycles, cross-functional team members develop, test, and iterate on code. Once testing is complete, the new version of the code is released to our customers.

Project 1: Automating task assignment

During the SDLC, after engineers finish writing code, the quality engineers (QEs) on the team are assigned to test the code changes. Examples of testing tasks include finding bugs or anomalies in our product, ensuring the product meets functional and non-functional requirements, and getting bugs resolved by developers.

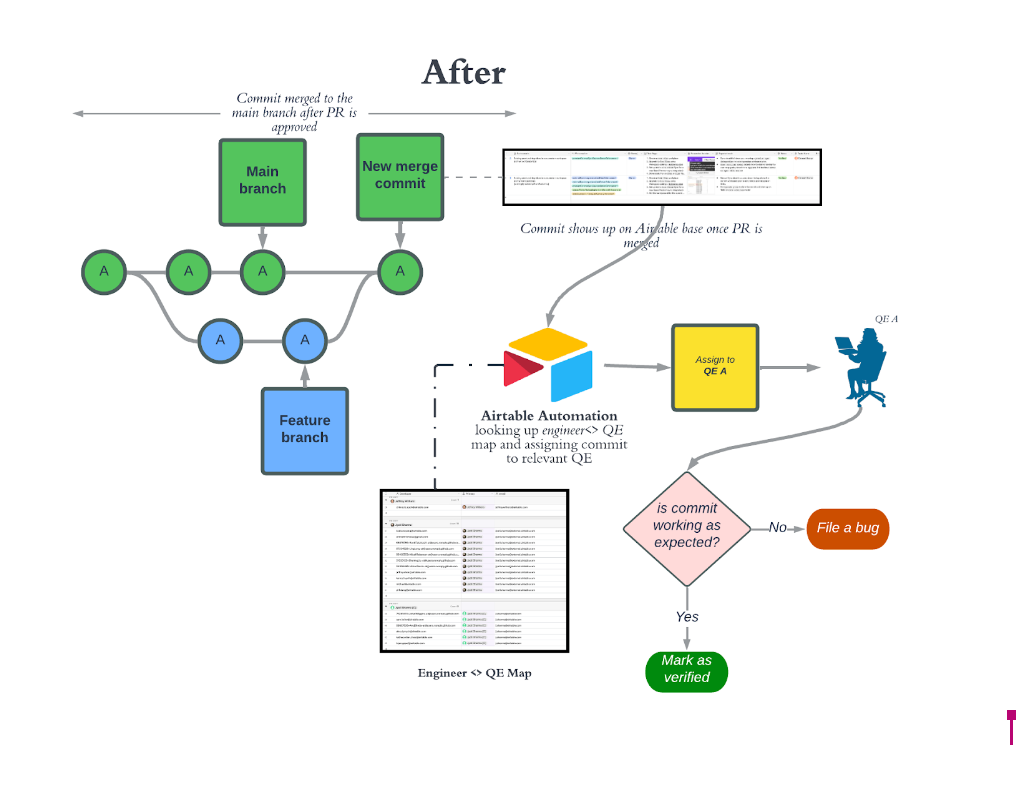

The QE group uses an Airtable base to track pull requests, which are small changes to the software. In that base, the team tracks which pull requests have been merged into the main branch of our central repository and which are ready for testing.

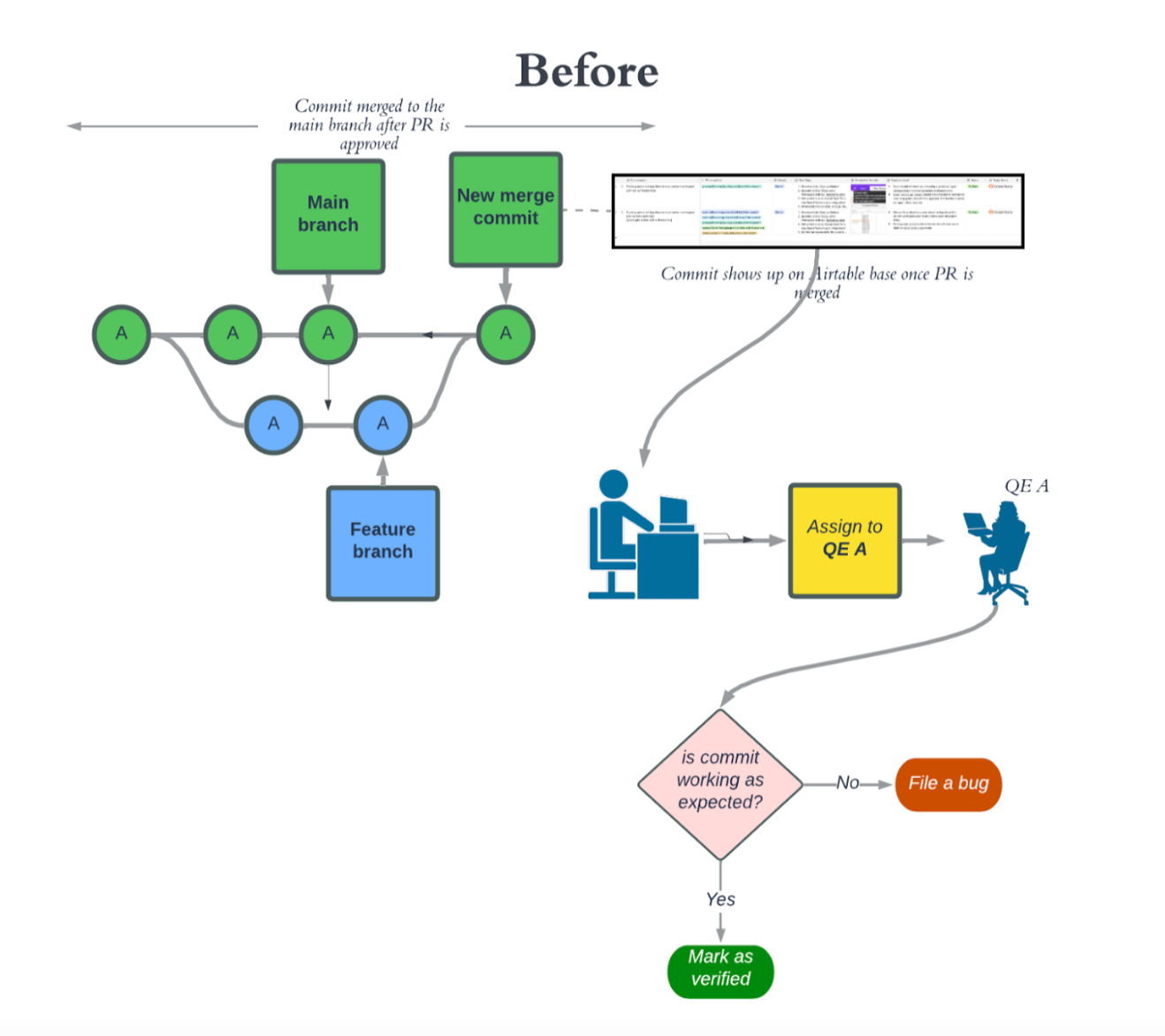

In the past, assigning tasks meant that a Quality Analyst with knowledge of both the engineering team and the QE team manually matched each engineer with a corresponding QE.

The QE would then validate the changes the engineer made to the code. Keeping up with these match-ups was a full-time job, and with new people joining the company every week, the process quickly became unscalable. Work would come to a screeching halt whenever the Quality Analyst was out, because no one else knew the engineer-QE connections.

We needed to codify and automate a process that previously only existed in one person’s head.

In other words, we needed to create a table that showed those engineer-QE connections. This would require quite an effort to chart, since there are hundreds of engineers at the company and only a handful of QEs. Another wrench in the plan: with new engineers joining the team each week, someone had to manually keep the table up to date.

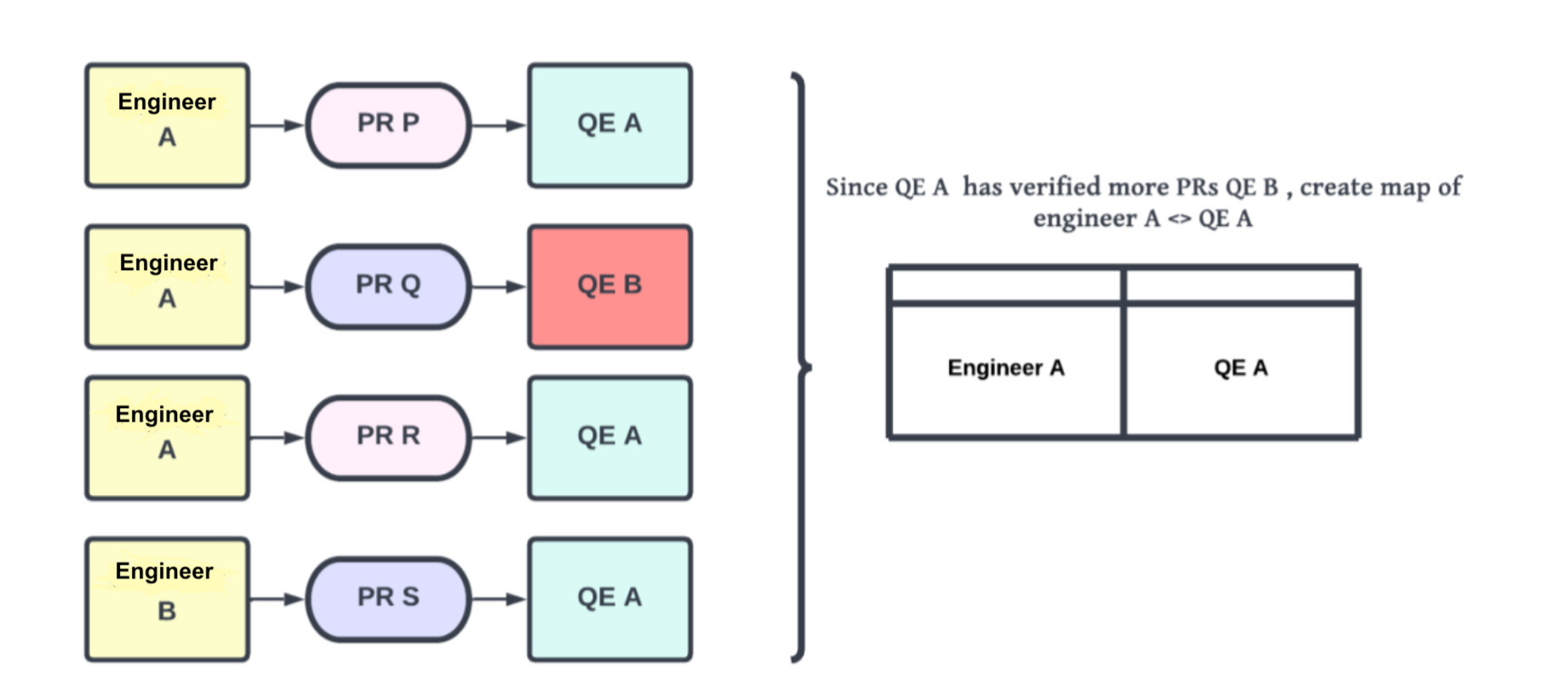

We wanted to leverage the rich history and existing data in our Airtable base, which creates a record each time a QE gets matched up to verify the pull request from a specific engineer. Since we have historical records of all pull requests verified by QEs, we can run an Airtable script action to automatically look up all instances in which an engineer has had a corresponding QE verify their change.

Our teams use a rolling 60-day window to pull information on these interactions (it wouldn’t be that relevant to track which QEs were verifying pull requests from engineers a year ago). Viewing that information in Airtable gives us a picture of the working relationships and connections. Airtable automation also lets us modify records in the base after looking up the relevant QE for that pull request.
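The lookup described above can be sketched in a few lines. This is a minimal, language-neutral illustration written in Python, not the Airtable script itself (Airtable scripting actions run JavaScript). It assumes each historical record carries an engineer, a QE, and a verification date, and that the engineer's most frequent QE inside the 60-day window is the right match. The post does not say how multiple QEs or ties are resolved, so the `Verification` type, `lookup_qe`, and the tie-breaking rule are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, Optional

WINDOW_DAYS = 60  # rolling window described in the post


@dataclass(frozen=True)
class Verification:
    """Hypothetical shape of one historical record: a QE verified an engineer's PR."""
    engineer: str
    qe: str
    verified_on: date


def lookup_qe(engineer: str, history: Iterable[Verification], today: date,
              window_days: int = WINDOW_DAYS) -> Optional[str]:
    """Return the QE who most often verified this engineer's PRs in the window, or None."""
    cutoff = today - timedelta(days=window_days)
    counts = Counter(
        v.qe
        for v in history
        if v.engineer == engineer and v.verified_on >= cutoff
    )
    if not counts:
        return None  # no recent match-up; the caller needs a fallback
    # Assumption: highest count wins; ties go to the QE seen first in `history`.
    return counts.most_common(1)[0][0]


history = [
    Verification("ana", "raj", date(2023, 5, 1)),
    Verification("ana", "raj", date(2023, 5, 20)),
    Verification("ana", "li", date(2023, 5, 25)),
    Verification("ana", "li", date(2023, 1, 5)),  # outside the 60-day window
]
print(lookup_qe("ana", history, today=date(2023, 6, 1)))  # raj
print(lookup_qe("bo", history, today=date(2023, 6, 1)))   # None
```

Returning `None` instead of guessing keeps the "no past match-up" case explicit, so it can be handled separately.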

A daily job triggers the lookup script to keep this information current. In some cases, however, there’s no past match-up to tell the script which QE should get a pull request.

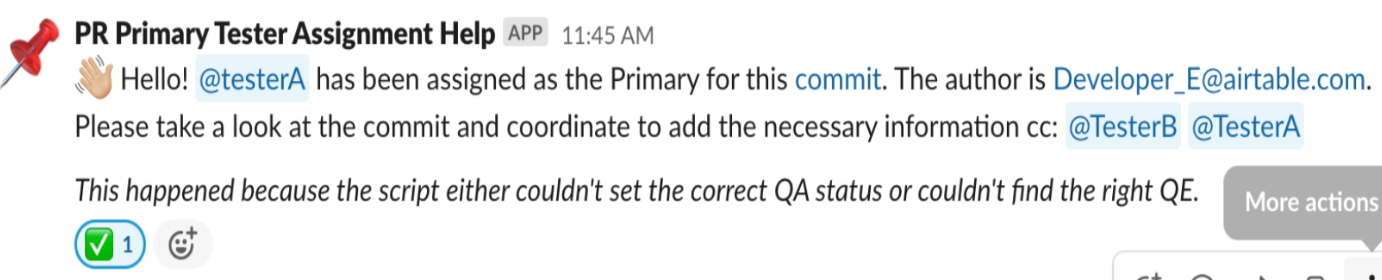

When that happens, we assign the task to a default QE and then post details of that pull request in a Slack channel to crowdsource the effort of assigning it for the first time. Once assigned, the script remembers the association between the specific engineer and QE.
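A hedged sketch of that fallback, again in Python for illustration only. The `DEFAULT_QE` value, the `assign` and `remember` helpers, and the in-memory dictionary standing in for the base's historical records are all hypothetical. The Slack message is shaped as the JSON body of a Slack incoming webhook (a `text` field); the post does not say which Slack mechanism the team uses, and nothing is sent here.

```python
import json
from typing import Dict, Optional, Tuple

DEFAULT_QE = "default-qe"  # hypothetical placeholder for the team's default assignee


def assign(pr_id: str, engineer: str,
           known: Dict[str, str]) -> Tuple[str, Optional[str]]:
    """Return (assignee, slack_payload). The payload is None when a match already exists."""
    qe = known.get(engineer)
    if qe is not None:
        return qe, None
    # No past match-up: park the PR with the default QE and ask the channel for help.
    payload = {
        "text": f"PR {pr_id} from {engineer} has no known QE match. "
                f"Assigned to {DEFAULT_QE} for now; who can take it?"
    }
    return DEFAULT_QE, json.dumps(payload)


def remember(engineer: str, qe: str, known: Dict[str, str]) -> None:
    """Record the first real assignment so later PRs from this engineer route automatically."""
    known[engineer] = qe


known = {"ana": "raj"}
print(assign("PR-1", "ana", known))  # ('raj', None)
assignee, message = assign("PR-2", "bo", known)
print(assignee)  # default-qe
remember("bo", "li", known)
print(assign("PR-3", "bo", known))  # ('li', None)
```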

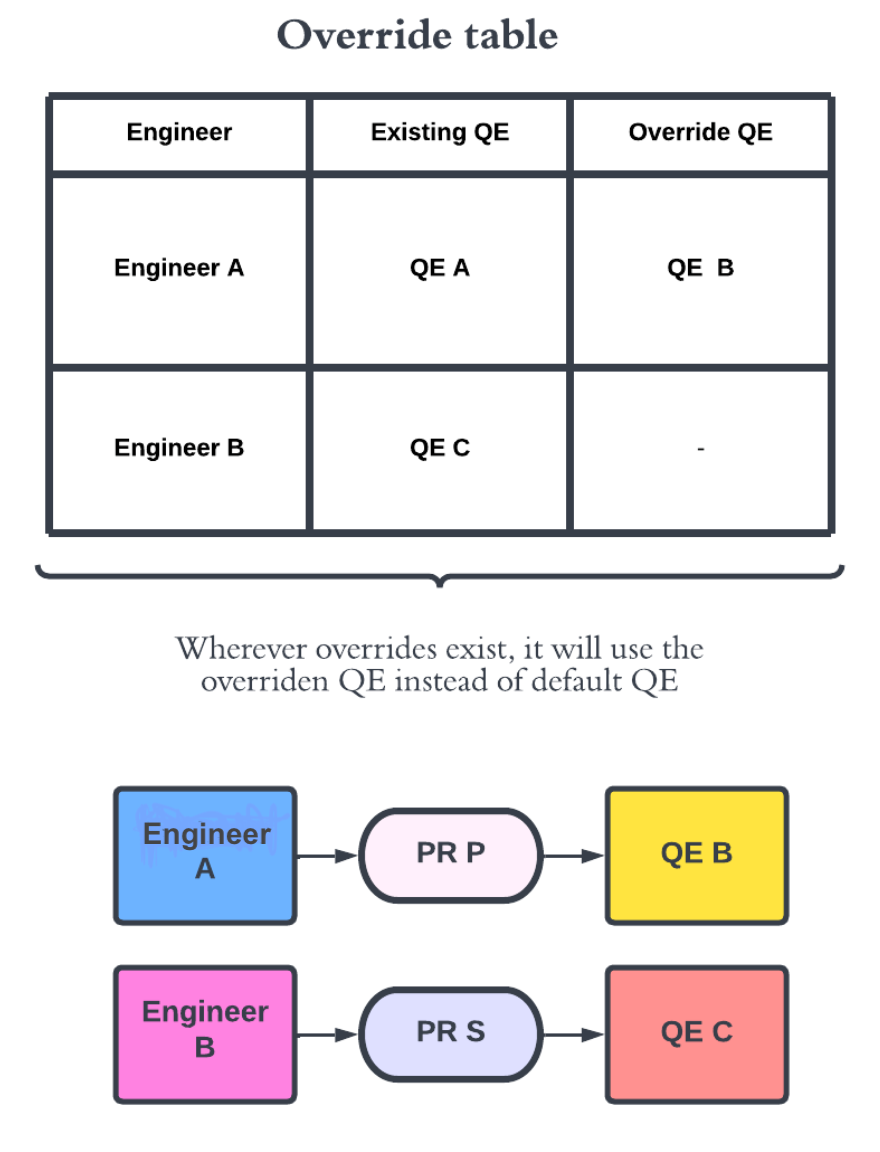

Another tricky scenario arises when a new QE joins the team or a current QE is on leave. For this case, we designed a simple program in Airtable that overrides the previous match-up between QE and engineer and automatically assigns the task to a different QE who is available. This program can be maintained manually or synced with Google Calendar.
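Reassignment could look something like the sketch below. It assumes the set of unavailable QEs has already been computed (for example, from a manually maintained list or from calendar data) and spreads reassigned engineers round-robin across the remaining QEs. Round-robin is an illustrative choice, not necessarily how the team's program picks whoever is available, and all names are hypothetical.

```python
from typing import Dict, Iterable, Set


def reassign_unavailable(assignments: Dict[str, str], unavailable: Set[str],
                         pool: Iterable[str]) -> Dict[str, str]:
    """Return a copy of engineer -> QE assignments with unavailable QEs replaced.

    Replacements are drawn round-robin from the QEs in `pool` who are available,
    so reassigned work is spread out instead of landing on one person.
    """
    available = [qe for qe in pool if qe not in unavailable]
    if not available:
        raise ValueError("no available QE to reassign to")
    result: Dict[str, str] = {}
    next_index = 0
    for engineer, qe in sorted(assignments.items()):  # sorted for a deterministic order
        if qe in unavailable:
            result[engineer] = available[next_index % len(available)]
            next_index += 1
        else:
            result[engineer] = qe
    return result


assignments = {"ana": "raj", "bo": "li", "cy": "raj"}
print(reassign_unavailable(assignments, {"raj"}, ["raj", "li", "mo"]))
# {'ana': 'li', 'bo': 'li', 'cy': 'mo'}
```

Returning a new dictionary leaves the original match-ups intact, so they can be restored when the QE returns.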

In addition, once a pull request is live in a test environment, the QE assigned to it is notified.

Our fully automated system can assign tasks to quality engineers around the clock, which is helpful for a remote team working across time zones. It even assigns tasks that come in after regular business hours or over the weekend.

Implementing the system was a great win for the release process at Airtable, saving the team some 45 hours per week.

We then took the automation a step further. The system now regularly sends Slack alerts to QEs about the verification status of their pull requests before the release goes out to customers. Once the QEs have verified all requests, the release is automatically approved.

With the new automated system, pull requests are verified and releases are authorized without human intervention 99% of the time. This step alone has saved the Quality Engineering team around 16 hours of work per week.
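The approval gate reduces to a simple rule: a release is approved only when every pull request in it has been verified, and each unverified pull request produces a reminder for its QE. The sketch below is a hypothetical Python illustration of that rule; `PullRequest`, `release_status`, and the reminder wording are assumptions, and actually posting the reminders to Slack is left out.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PullRequest:
    pr_id: str
    qe: str
    verified: bool


def release_status(prs: List[PullRequest]) -> Tuple[bool, List[str]]:
    """Return (approved, reminders) for the pull requests in one release."""
    pending = [pr for pr in prs if not pr.verified]
    reminders = [f"{pr.qe}: {pr.pr_id} is still awaiting verification" for pr in pending]
    # Assumption: an empty release is never auto-approved.
    approved = bool(prs) and not pending
    return approved, reminders


release = [PullRequest("PR-1", "raj", True), PullRequest("PR-2", "li", False)]
print(release_status(release))
# (False, ['li: PR-2 is still awaiting verification'])
release[1].verified = True
print(release_status(release))  # (True, [])
```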

Project 2: Testing third-party integrations

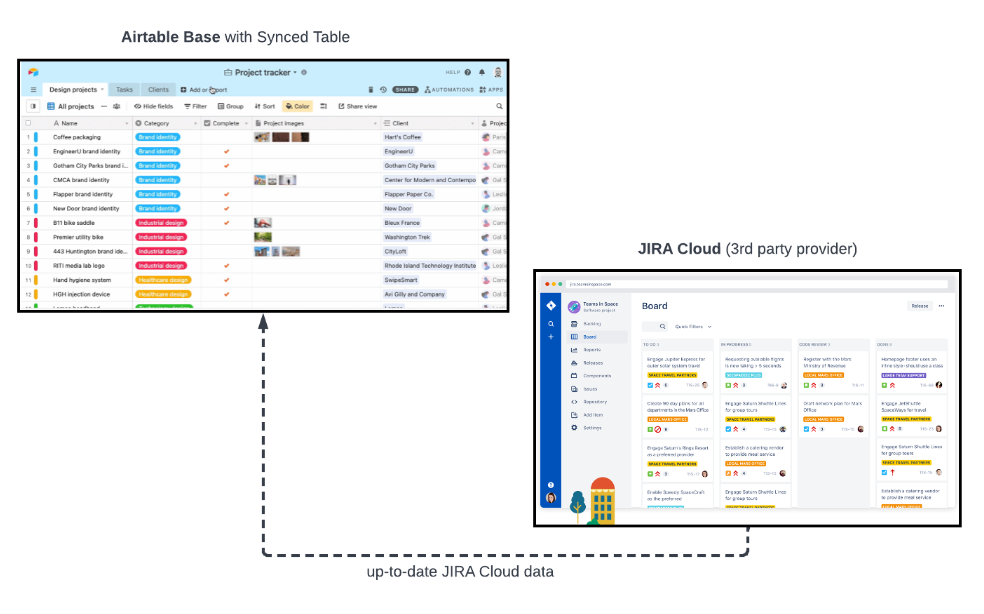

Airtable has several third-party integrations for importing data into and exporting data from an Airtable base. One way we use these integrations is through synced tables: with Airtable Sync, you can sync records from a third-party provider such as JIRA Cloud or Salesforce into one or more destination bases to create a single source of truth across your organization.

This presented two potential areas to test:

- Would a third-party API still send data formatted in a way that lets an Airtable base read and use it?

- Would the sync be set up in a way that updates values on a periodic basis in our Airtable base?
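The first question amounts to a contract check on the third-party payload. As a minimal sketch, assume the synced table depends on a few fields of a JIRA issue as returned by the JIRA Cloud REST API (`key`, `fields.summary`, and `fields.status.name`). The `REQUIRED` mapping and the `format_problems` helper are hypothetical; a real check would mirror the table's actual field mapping.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical subset of JIRA issue fields the synced table depends on.
REQUIRED: Dict[Tuple[str, ...], type] = {
    ("key",): str,
    ("fields", "summary"): str,
    ("fields", "status", "name"): str,
}


def format_problems(issue: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means the format still fits."""
    problems: List[str] = []
    for path, expected in REQUIRED.items():
        dotted = ".".join(path)
        node: Any = issue
        for part in path:
            if not isinstance(node, dict) or part not in node:
                problems.append(f"missing {dotted}")
                break
            node = node[part]
        else:  # runs only when every part of the path was found
            if not isinstance(node, expected):
                problems.append(
                    f"{dotted} is {type(node).__name__}, expected {expected.__name__}"
                )
    return problems


sample = {"key": "QE-1", "fields": {"summary": "Sync check", "status": {"name": "To Do"}}}
print(format_problems(sample))  # []
```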

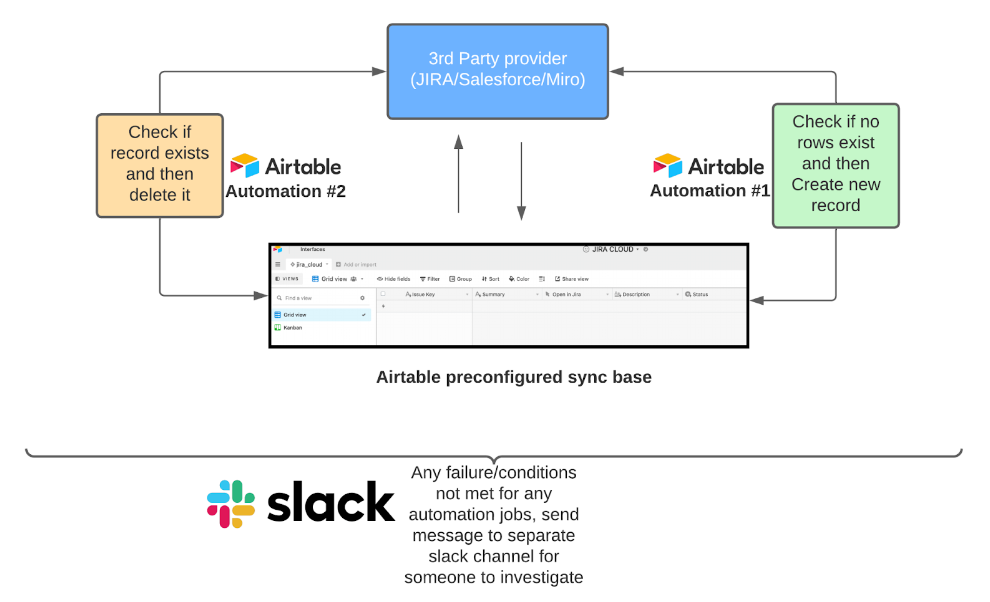

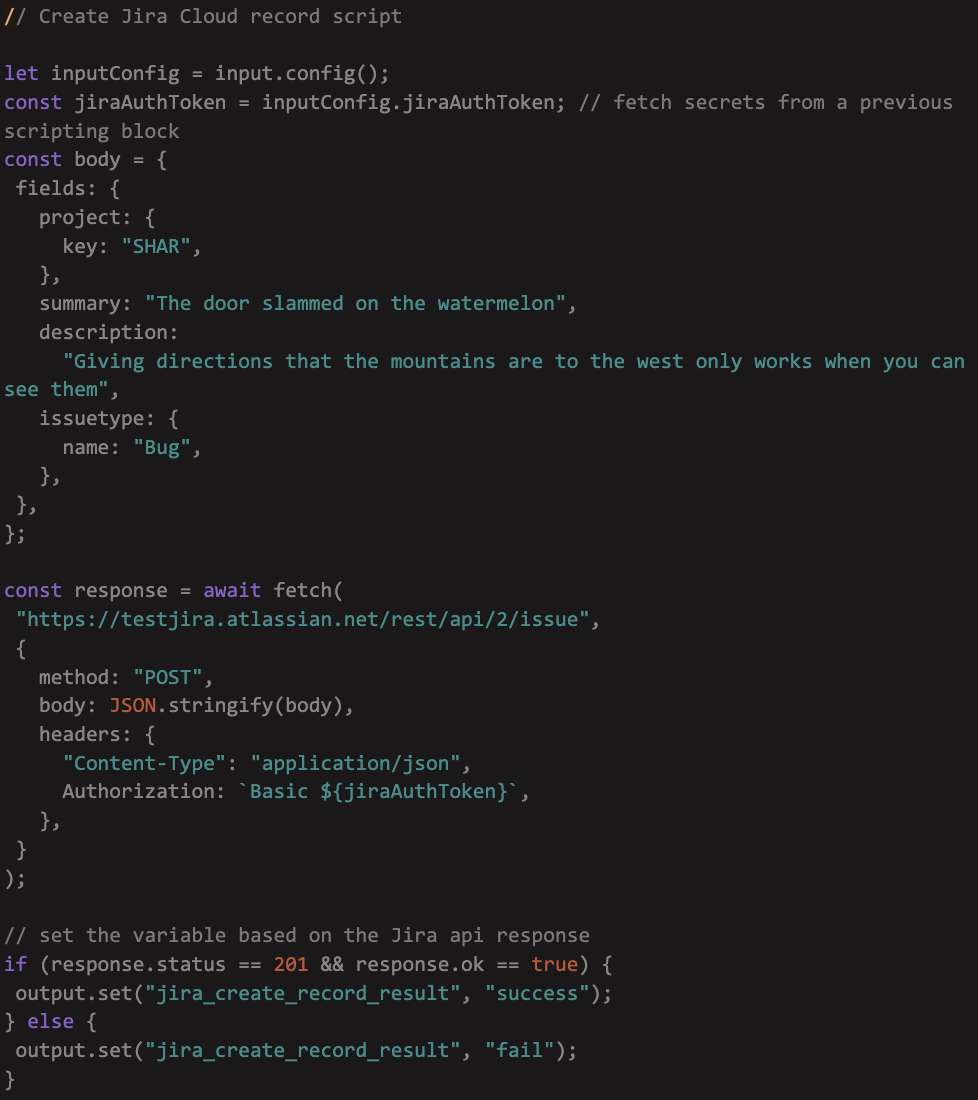

We decided to use an Airtable automation, which lets users configure custom trigger-action workflows directly within an Airtable base. Automations can run custom scripts, and those scripts can make REST API calls. A REST API call lets Airtable request and retrieve information from another program, in this case JIRA Cloud. The script creates records, and the automation then verifies them using the “Find records” action. Because automations can be triggered on a time interval, we can run the check at whatever cadence fits the team’s schedule.
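For reference, creating an issue through the JIRA Cloud REST API is a `POST` to `/rest/api/3/issue` with a JSON body under `fields`, authenticated with an Atlassian account email and API token. The sketch below builds, but does not send, such a request in Python using only the standard library. It is not the team's script (which runs in Airtable's JavaScript environment), and the site, project key, credentials, and `Task` issue type are placeholders.

```python
import base64
import json
import urllib.request


def build_create_issue_request(site: str, email: str, api_token: str,
                               project_key: str, summary: str) -> urllib.request.Request:
    """Build (but do not send) a JIRA Cloud 'create issue' request."""
    body = {
        "fields": {
            "project": {"key": project_key},
            "summary": summary,
            "issuetype": {"name": "Task"},  # placeholder; must exist in the target project
        }
    }
    # JIRA Cloud accepts basic auth with an account email and an API token.
    credentials = base64.b64encode(f"{email}:{api_token}".encode()).decode()
    return urllib.request.Request(
        f"https://{site}/rest/api/3/issue",
        data=json.dumps(body).encode(),
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


req = build_create_issue_request(
    "example.atlassian.net", "qe-bot@example.com", "API_TOKEN", "QE", "Sync health check"
)
print(req.get_method(), req.full_url)
# POST https://example.atlassian.net/rest/api/3/issue
```

Passing the request to `urllib.request.urlopen` would send it; the JSON response includes the new issue's `key`, which a health check can then look for in the synced table.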

Two actions happen in quick succession: one script creates a record in JIRA Cloud, and another deletes that record. After each action, we check that our synced table has updated with the latest values from JIRA Cloud.
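The create-then-delete check can be sketched as follows, with the JIRA calls and the synced-table read passed in as plain functions so the control flow stays visible. The timeout and polling-interval defaults are assumptions, since the post does not say how long the team waits for a sync. The sketch also deletes the test record even when the first check fails, so a broken sync does not leave stray issues behind.

```python
import time
from typing import Callable, Set


def wait_until(predicate: Callable[[], bool], timeout_s: float, interval_s: float) -> bool:
    """Poll `predicate` until it returns True or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


def sync_health_check(create_issue: Callable[[], str],
                      delete_issue: Callable[[str], None],
                      synced_keys: Callable[[], Set[str]],
                      timeout_s: float = 600.0,
                      interval_s: float = 30.0) -> bool:
    """Create a test issue, confirm it syncs, delete it, and confirm the deletion syncs."""
    key = create_issue()
    appeared = wait_until(lambda: key in synced_keys(), timeout_s, interval_s)
    # Always clean up, even if the record never showed up in the synced table.
    delete_issue(key)
    removed = wait_until(lambda: key not in synced_keys(), timeout_s, interval_s)
    return appeared and removed


# Usage with in-memory fakes standing in for JIRA Cloud and the synced table.
source: Set[str] = set()


def fake_create() -> str:
    source.add("QE-1")
    return "QE-1"


print(sync_health_check(fake_create, source.discard, lambda: set(source), 1.0, 0.01))  # True
```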

The code for the automated custom script action, used to create a new record in JIRA, looks like this:

Automating the health checks for these synced tables helps save 70% of QE bandwidth for every release.

Acknowledgments

Huge thanks to Swati Gogineni, Luiz Gontijo Jr, Jeffrey Withers, Marci Genden, and Rekha Ramesh for contributions on these QE projects, and to Kah Seng Tay for reviewing and providing feedback on this article.